Self-Driving Cars: Technology Breakdown & Future Outlook

Self-driving cars harness a complex interplay of advanced sensors, artificial intelligence, and sophisticated software to perceive their environment, make real-time decisions, and navigate autonomously, fundamentally changing the future of transportation.

In a world rapidly advancing toward automation, few technologies capture the public imagination quite like the self-driving car. What once seemed confined to science fiction is becoming an increasingly tangible reality, promising to reshape our daily commutes, logistics, and even urban planning. But beyond the flashy demonstrations and futuristic concepts lies a complex tapestry of engineering and computation that makes autonomous vehicles possible. This article breaks down the technology behind self-driving cars, exploring the components and innovations propelling this revolution on wheels.

The Foundation: Sensory Perception Systems

At the heart of any autonomous vehicle lies its ability to “see” and “understand” its surroundings. This is achieved through a diverse array of advanced sensors, each contributing a crucial piece to the vehicle’s real-time environmental model. Just as human drivers rely on their eyes and ears, self-driving cars synthesize data from multiple input streams to create a comprehensive, 360-degree awareness of the road, other vehicles, pedestrians, and obstacles.

No single sensor system can provide all the necessary information for safe and reliable autonomous operation. Instead, a multi-modal approach is adopted, leveraging the strengths of each technology while mitigating their individual limitations. This redundancy and diversity are critical for robustness, especially in challenging weather conditions or complex urban environments. The integration of these systems is a testament to the sophisticated engineering involved in autonomous vehicle development.

Lidar: The Eyes of the Self-Driving Car

Lidar, an acronym for “Light Detection and Ranging,” functions much like radar, but with light instead of radio waves. It emits pulsed laser light into the environment and measures the time it takes for these pulses to return. By doing so, Lidar creates highly detailed 3D maps of the surroundings, providing precise distance measurements and object shapes. This technology is particularly adept at:

- Generating dense point clouds: Hundreds of thousands to millions of measured points per second build a virtual 3D representation of the world.

- Operating in varying light conditions: Lidar is less affected by shadows or direct sunlight compared to cameras.

- Accurate distance measurement: Crucial for obstacle detection and avoidance.

Despite its precision, Lidar can be impacted by fog, heavy rain, or snow, as these atmospheric conditions can scatter the laser beams, reducing range and accuracy. Therefore, it must be complemented by other sensor types to ensure continuous performance.
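The time-of-flight idea described above comes down to one line of arithmetic. Here is a minimal sketch, assuming the sensor reports the round-trip time of a single pulse in seconds; the function name `range_from_round_trip` is made up for illustration and is not part of any Lidar vendor's API.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def range_from_round_trip(round_trip_s: float) -> float:
    """Return the distance in meters to the surface that reflected the pulse."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the target and back, so only half the path is range.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


# A pulse that returns after 200 nanoseconds hit something about 30 m away.
print(f"{range_from_round_trip(200e-9):.2f} m")
```

A real unit repeats this measurement across many beams and angles, which is how a single distance turns into a point cloud.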

Radar: All-Weather Detection

Radar, or “Radio Detection and Ranging,” uses radio waves to detect objects and measure their speed and distance. Unlike Lidar, radar waves are less susceptible to adverse weather conditions such as fog, rain, or snow, making it an invaluable component for all-weather driving capabilities. Radar sensors are primarily used for:

- Long-range object detection: Identifying vehicles and obstacles far ahead.

- Speed measurement: Crucial for adaptive cruise control and collision warning systems.

- Blind spot monitoring: Covering areas not easily visible to the driver or other sensors.

While excellent for distance and speed, radar typically provides lower resolution and less detailed shape information compared to Lidar, meaning it may struggle to distinguish between closely spaced objects or identify their exact form.
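The article does not say how radar measures speed. A common approach is the Doppler effect: a moving target shifts the frequency of the reflected wave in proportion to its radial speed. The sketch below assumes a 77 GHz carrier, a band commonly used by automotive radar; the function name is hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
CARRIER_HZ = 77e9  # assumed carrier frequency, for illustration only


def radial_speed_from_doppler(doppler_shift_hz: float,
                              carrier_hz: float = CARRIER_HZ) -> float:
    """Relative radial speed in m/s; positive means the target is approaching."""
    if carrier_hz <= 0:
        raise ValueError("carrier frequency must be positive")
    # The factor of 2 accounts for the wave being shifted on the way out and back.
    return doppler_shift_hz * SPEED_OF_LIGHT_M_PER_S / (2.0 * carrier_hz)


# A shift of roughly 5.1 kHz at 77 GHz corresponds to about 10 m/s of closing speed.
print(f"{radial_speed_from_doppler(5137.0):.2f} m/s")
```

Only the component of motion along the line of sight shows up this way, which is one reason radar readings are combined with other sensors.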

Cameras: Visual Perception and Recognition

Cameras, similar to the human eye, capture visual information of the environment. Self-driving cars employ multiple cameras, strategically placed around the vehicle to provide a complete 360-degree view. These cameras typically come in two forms: monocular (single lens) and stereo (two lenses working together to perceive depth). Their primary functions include:

- Lane keeping: Identifying lane markings and road boundaries.

- Traffic sign recognition: Reading and interpreting speed limits, stop signs, and other regulatory signs.

- Pedestrian and vehicle classification: Distinguishing between different types of road users and obstacles.

Cameras excel at object classification and semantic understanding of the scene, interpreting complex visual cues that Lidar and radar might miss. However, their performance can be severely hampered by poor lighting conditions, glare from the sun, or severe weather.
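Stereo depth perception can be illustrated with the standard pinhole-camera relation: depth equals focal length times baseline divided by disparity. This is a sketch that assumes an idealized, rectified stereo pair; the focal length, baseline, and function name are illustrative values rather than figures from any particular vehicle.

```python
def depth_from_disparity(focal_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Depth in meters of a point seen by both cameras of a rectified stereo pair.

    focal_px: focal length expressed in pixels
    baseline_m: distance between the two camera centers
    disparity_px: horizontal pixel shift of the point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


# With a 1000 px focal length and a 0.3 m baseline, a 15 px shift means 20 m.
print(depth_from_disparity(1000.0, 0.3, 15.0))
```

Because disparity shrinks as objects get farther away, small pixel errors translate into large depth errors at long range, one of the limitations that Lidar and radar help cover.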

Ultrasonic Sensors: Proximity Detection

Ultrasonic sensors emit high-frequency sound waves and measure the time it takes for the echo to return. These sensors are short-range and are primarily used for parking assistance, low-speed maneuvers, and detecting objects very close to the vehicle. They are particularly useful for tasks such as:

- Parallel parking assistance: Detecting curbs and other vehicles.

- Blind spot monitoring at low speeds: Preventing minor collisions.

- General proximity sensing: Alerting the vehicle to immediate obstacles.

Their limited range makes them unsuitable for high-speed driving, but they are an essential component of the vehicle’s low-speed spatial awareness.

The Brain: Artificial Intelligence and Machine Learning

Raw sensor data, no matter how abundant, is meaningless without a sophisticated “brain” to process it. This is where artificial intelligence (AI) and machine learning (ML) come into play, serving as the cognitive powerhouse of the self-driving car. AI algorithms analyze the vast streams of data from Lidar, radar, cameras, and ultrasonic sensors, interpreting the environment, predicting behaviors, and making real-time decisions.

The development of these AI systems involves extensive training on massive datasets, including hours of real-world driving footage, simulated scenarios, and lidar point clouds. This training enables the AI to recognize patterns, adapt to new situations, and learn from its experiences, continuously improving its driving capabilities.

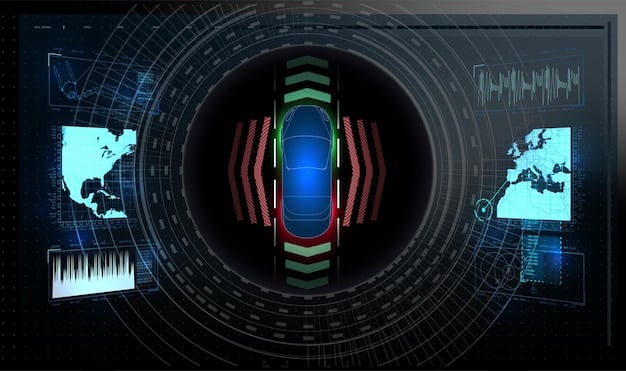

Perception: Understanding the World

The perception module is arguably the most critical component of the AI system. It takes fragmented sensor data and fuses it into a coherent, dynamic model of the vehicle’s immediate surroundings. This involves several sub-tasks:

- Object Detection and Tracking: Identifying and continuously monitoring the position, velocity, and trajectory of other vehicles, pedestrians, cyclists, and static obstacles. Deep learning models, particularly convolutional neural networks (CNNs), are highly effective here.

- Lane and Road Boundary Detection: Accurately identifying lane markings, road edges, and other road features to keep the vehicle within its designated path.

- Traffic Light and Sign Recognition: Interpreting the state of traffic lights and understanding the meaning of various road signs in real-time.

The ability to fuse data from disparate sensor types—combining the precise 3D mapping of Lidar with the all-weather capabilities of radar and the semantic understanding of cameras—is what gives the self-driving car its robust perception. This sensor fusion creates a more complete and reliable understanding of the environment than any single sensor could provide.
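One simple way to picture sensor fusion is inverse-variance weighting: each sensor's estimate counts in proportion to how much it is trusted. The sketch below fuses independent distance estimates of the same object. Production systems typically use richer filters (Kalman filters, for example), and the variances here are made-up numbers.

```python
from typing import List, Tuple


def fuse_estimates(measurements: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse (value, variance) pairs into one (value, variance) estimate.

    Assumes the measurements are independent and every variance is positive.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total_weight
    return fused_value, 1.0 / total_weight


# Hypothetical readings: a precise Lidar range and a noisier radar range.
lidar = (20.0, 0.01)
radar = (20.6, 0.09)
value, variance = fuse_estimates([lidar, radar])
print(f"fused range: {value:.2f} m, variance: {variance:.3f}")
```

The fused variance is smaller than either input variance, which is the numerical counterpart of the claim that fusion is more reliable than any single sensor.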

Prediction: Anticipating Future Movements

Once the perception system understands the current state of the world, the prediction module takes over. This module forecasts the likely future actions of other road users (e.g., will that car ahead change lanes? Is that pedestrian about to cross the street?). This is a probabilistic process, as human behavior is inherently unpredictable, but effective prediction is vital for safe navigation. Factors influencing prediction include:

- Historical movement patterns: Learning typical pedestrian and vehicle behaviors.

- Intent estimation: Inferring likely actions based on motion vectors, turn signals, and context.

- Interaction models: Understanding how different road users interact with each other and the autonomous vehicle.

Accurate prediction allows the self-driving car to anticipate potential hazards and plan its maneuvers proactively, ensuring smooth and safe interactions within complex traffic scenarios. This proactive approach distinguishes sophisticated autonomous systems from simpler driver-assistance features.
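As a baseline for the prediction step, many systems start from a constant-velocity assumption before layering on learned, probabilistic models. The sketch below shows only that deterministic baseline; the `TrackedObject` type and its fields are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class TrackedObject:
    x: float   # position in meters, vehicle frame
    y: float
    vx: float  # velocity in meters per second
    vy: float


def predict_constant_velocity(obj: TrackedObject, horizon_s: float,
                              step_s: float) -> List[Tuple[float, float]]:
    """Future (x, y) positions, assuming the object keeps its current velocity."""
    if step_s <= 0 or horizon_s < 0:
        raise ValueError("step must be positive and horizon non-negative")
    steps = int(round(horizon_s / step_s))
    return [(obj.x + obj.vx * k * step_s, obj.y + obj.vy * k * step_s)
            for k in range(1, steps + 1)]


# A pedestrian 5 m to the side, walking toward the lane at 1.5 m/s.
pedestrian = TrackedObject(x=0.0, y=5.0, vx=0.0, vy=-1.5)
print(predict_constant_velocity(pedestrian, horizon_s=2.0, step_s=0.5))
```

A real prediction module would attach uncertainty to each future position and consider several possible intents rather than a single straight-line path.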

Planning and Control: Decision-Making and Execution

The planning and control module is the decision-maker and executor. Based on the perceived environment and predicted actions, it determines the optimal driving strategy, generating a safe, comfortable, and efficient trajectory for the vehicle. This involves:

- Route Planning: Determining the optimal path from origin to destination, considering traffic, road conditions, and user preferences. This is typically done at a higher level, involving map data and real-time traffic information.

- Behavior Planning: Deciding on high-level maneuvers such as lane changes, turns, stops, and accelerations in response to dynamic situations. This module also handles tricky scenarios like merging into traffic or navigating intersections.

- Motion Planning: Translating behavior plans into a tangible, smooth, and collision-free path that the vehicle’s actuators can follow. This involves generating many potential trajectories and selecting the safest and most efficient one.

- Vehicle Control: Executing the planned trajectory by sending commands to the vehicle’s steering, acceleration, and braking systems. This control needs to be precise and responsive, accounting for vehicle dynamics and external disturbances.

The interplay between these planning layers ensures that the vehicle not only reaches its destination but does so safely and efficiently, adapting to continuously changing road conditions and unexpected events.
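The "generate many candidates, pick the best" idea behind motion planning can be sketched in a few lines. This toy version works only with lateral offsets from the lane center and uses an invented cost function; real planners score full trajectories over time against many more criteria.

```python
import math
from typing import List, Optional


def select_lateral_offset(candidates_m: List[float],
                          obstacle_offsets_m: List[float],
                          safety_margin_m: float = 1.0) -> Optional[float]:
    """Pick the candidate offset with the lowest cost, or None if all are unsafe."""
    best_offset = None
    best_cost = math.inf
    for offset in candidates_m:
        clearance = min((abs(offset - obs) for obs in obstacle_offsets_m),
                        default=math.inf)
        if clearance < safety_margin_m:
            continue  # reject candidates that pass too close to an obstacle
        # Illustrative cost: stay near the lane center, prefer more clearance.
        cost = abs(offset) + 1.0 / clearance
        if cost < best_cost:
            best_offset, best_cost = offset, cost
    return best_offset


# An obstacle sits 0.5 m right of center, so the planner shifts left.
print(select_lateral_offset([-1.5, -0.75, 0.0, 0.75, 1.5], [0.5]))
```

Returning `None` when every candidate is rejected is a deliberate choice here: it forces the caller to fall back to a different behavior, such as slowing down, instead of silently picking an unsafe path.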

Mapping and Localization: Knowing Where You Are

For a self-driving car to navigate effectively, it needs to know precisely where it is in the world and what the road network looks like. This is achieved through highly detailed maps and sophisticated localization techniques.

High-Definition (HD) Maps

Unlike the standard GPS maps used in conventional navigation systems, self-driving cars rely on High-Definition (HD) maps. These maps are significantly more detailed, containing centimeter-level accuracy for features such as:

- Lane geometry: Exact curvature, width, and number of lanes.

- Road infrastructure: Traffic signs, traffic lights, crosswalks, and even individual poles.

- Elevation and curvature: Detailed topographical information.

- Static obstacles: Curbs, barriers, and permanent structures.

HD maps act as a prior knowledge base, allowing the vehicle to anticipate road features and plan maneuvers well in advance. They provide a precise reference point against which the live sensor data can be compared, enhancing the accuracy of perception and prediction.

Localization Techniques

While HD maps provide the static context, localization is the process of pinpointing the vehicle’s exact position on that map in real-time. This is crucial for precise navigation and adherence to lane boundaries. Common localization techniques include:

- GPS with Corrections: Standard GPS alone, typically accurate to within a few meters, is not precise enough for autonomous driving. Differential GPS (DGPS) improves on this using correction signals from reference stations, and real-time kinematic (RTK) positioning can achieve centimeter-level accuracy.

- Lidar Localization (Scan Matching): The vehicle constantly compares the 3D point clouds generated by its Lidar sensors to the features encoded in the HD map. By matching these live scans with the map, the vehicle can determine its precise location.

- Visual Localization (Vision-based Odometry): Cameras can also be used for localization, identifying unique visual landmarks (e.g., buildings, trees, specific road markings) and comparing them against a visual database or the HD map.

- Inertial Measurement Units (IMUs): These sensors measure the vehicle’s acceleration and angular velocity, providing dead reckoning capabilities to track movement and orientation. While prone to drift over time, IMUs are essential for maintaining localization accuracy during brief sensor outages or in areas with poor GPS signals.

The combination of these localization methods ensures continuous and accurate positioning, even in challenging environments like urban canyons or tunnels where GPS signals may be intermittent. The redundancy built into these systems offers an added layer of safety.
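Dead reckoning from IMU readings, mentioned above, amounts to integrating acceleration and angular velocity over time. The sketch below assumes a flat 2D world and noise-free readings. The `Pose2D` type is hypothetical, and a real system would correct the accumulated drift with GPS, Lidar, or camera fixes.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float            # meters
    y: float            # meters
    heading_rad: float  # 0 points along the +x axis
    speed_mps: float


def dead_reckon(pose: Pose2D, accel_mps2: float, yaw_rate_rps: float,
                dt: float) -> Pose2D:
    """Advance the pose by one time step using simple Euler integration."""
    speed = pose.speed_mps + accel_mps2 * dt
    heading = pose.heading_rad + yaw_rate_rps * dt
    return Pose2D(
        x=pose.x + speed * math.cos(heading) * dt,
        y=pose.y + speed * math.sin(heading) * dt,
        heading_rad=heading,
        speed_mps=speed,
    )


# Drive straight at 10 m/s for one second in ten 0.1 s steps.
pose = Pose2D(0.0, 0.0, 0.0, 10.0)
for _ in range(10):
    pose = dead_reckon(pose, accel_mps2=0.0, yaw_rate_rps=0.0, dt=0.1)
print(f"x = {pose.x:.2f} m, y = {pose.y:.2f} m")
```

Any small bias in the measured acceleration or yaw rate is integrated along with the signal, which is why the position estimate drifts when no external correction is available.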

Vehicle Actuation and Control Systems

Even with advanced perception and planning systems, a self-driving car is useless without the ability to physically execute its decisions. This is where the vehicle’s actuation and control systems come into play. These systems receive commands from the central AI and translate them into physical actions, controlling the vehicle’s movement.

Modern vehicles often come equipped with “drive-by-wire” systems, in which the traditional mechanical linkages between the steering wheel, brake pedal, and accelerator are replaced by electronic signals. This makes it easier for the autonomous system to control these functions directly. The vehicle’s onboard computers precisely manage three areas: steering, braking, and acceleration.

Steering Control

The steering system receives commands from the planning module and precisely adjusts the wheels’ angle to follow the intended path. This requires highly responsive and accurate electromechanical steering systems that can make minute adjustments to maintain lane centering or execute complex maneuvers like turns and lane changes. The system must also account for vehicle speed, road surface conditions, and tire grip to ensure stability.

Braking Control

The autonomous braking system is crucial for safety and deceleration. It can apply the brakes independently to maintain safe following distances, stop at traffic lights, or avoid obstacles. This is often an extension of existing anti-lock braking systems (ABS) and electronic stability control (ESC), providing precise and modulated braking force. Regenerative braking, common in electric vehicles, also plays a role in energy efficiency.

Acceleration Control

The acceleration system controls the vehicle’s speed, whether increasing it to match traffic flow or maintaining a constant speed through adaptive cruise control. This involves managing the engine’s throttle or, in electric vehicles, the power delivery to the electric motors. Smooth acceleration and deceleration are critical for passenger comfort and efficient energy consumption. The system must also be able to react quickly to changes in speed limits or traffic dynamics.

These control systems work in a continuous feedback loop, constantly comparing the vehicle’s actual state (position, speed, heading) with the desired trajectory and making micro-adjustments to stay on course. This closed-loop control is fundamental to the smooth and safe operation of self-driving cars.
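A classic way to implement this kind of feedback loop is a PID controller, which turns the gap between desired and actual state into a corrective command. The article does not name a specific controller, so treat the following as a sketch of one common technique. The gains and the one-line vehicle model are illustrative and not tuned for any real car.

```python
from typing import Optional


class PIDController:
    """Textbook PID controller; the gains used below are illustrative only."""

    def __init__(self, kp: float, ki: float, kd: float) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self._integral = 0.0
        self._prev_error: Optional[float] = None

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        error = setpoint - measured
        self._integral += error * dt
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        return self.kp * error + self.ki * self._integral + self.kd * derivative


def simulate_speed_tracking(target_mps: float, steps: int = 600,
                            dt: float = 0.1) -> float:
    """Run the loop against a toy model where the command is an acceleration."""
    controller = PIDController(kp=0.8, ki=0.1, kd=0.0)
    speed = 0.0
    for _ in range(steps):
        accel_cmd = controller.update(target_mps, speed, dt)
        speed += accel_cmd * dt  # toy vehicle: speed integrates the command
    return speed


print(f"speed after 60 s: {simulate_speed_tracking(20.0):.2f} m/s")
```

The same structure applies to steering, with lateral offset or heading error in place of speed error.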

Communication and Connectivity: The Networked Car

While a self-driving car can operate autonomously based on its onboard sensors and AI, its capabilities are significantly enhanced by communication with external entities. Connectivity allows for real-time updates, enhanced situational awareness, and more efficient traffic flow.

Vehicle-to-Everything (V2X) Communication

V2X communication refers to the overarching framework where vehicles can communicate with other vehicles (V2V), infrastructure (V2I), pedestrians (V2P), and the network (V2N). This broad communication capability opens up new possibilities for safety and efficiency:

- V2V (Vehicle-to-Vehicle): Allows cars to share real-time information with each other, such as speed, heading, braking events, and hazards ahead. This can provide a more immediate and comprehensive picture of traffic conditions than onboard sensors alone, especially in complex scenarios or beyond the line of sight (e.g., around a blind corner).

- V2I (Vehicle-to-Infrastructure): Enables vehicles to communicate with smart road infrastructure like traffic lights, road signs, and construction zones. This can facilitate optimized traffic flow, inform drivers of upcoming conditions, or even allow vehicles to “see” traffic light changes in advance.

- V2P (Vehicle-to-Pedestrian): Allows communication with vulnerable road users, either directly through their smartphones or wearables, or via vehicle-mounted sensors that detect their presence. This enhances pedestrian safety by providing warnings to both the vehicle and the pedestrian.

- V2N (Vehicle-to-Network): Connects the vehicle to cloud services for map updates, software over-the-air (OTA) updates, traffic information, and remote diagnostics. This ensures the vehicle’s systems are always up-to-date and have access to the latest data.

5G cellular networks are expected to play an important role in V2X, offering the low latency and high bandwidth these time-critical communications need. This interconnected ecosystem forms the backbone of future smart cities and autonomous transportation systems.
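To make the V2V idea concrete, here is a sketch of the kind of status message one car might broadcast to its neighbors. The `V2VStatusMessage` type, its field names, and the JSON encoding are all invented for illustration. Deployed V2X systems use standardized binary message formats rather than this layout.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class V2VStatusMessage:
    vehicle_id: str
    speed_mps: float
    heading_deg: float
    hard_braking: bool
    hazard: Optional[str] = None  # free-text hazard note, if any


def encode(message: V2VStatusMessage) -> bytes:
    """Serialize the message to UTF-8 JSON bytes for transmission."""
    return json.dumps(asdict(message)).encode("utf-8")


def decode(payload: bytes) -> V2VStatusMessage:
    """Rebuild a message from bytes produced by encode()."""
    return V2VStatusMessage(**json.loads(payload.decode("utf-8")))


outgoing = V2VStatusMessage("car-42", 13.9, 90.0, True, "debris in lane")
received = decode(encode(outgoing))
print(received.hard_braking, received.hazard)
```

A following vehicle that receives `hard_braking=True` can begin slowing before its own sensors have line of sight to the hazard.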

Data Security and Privacy

With increased connectivity comes the critical need for robust cybersecurity. Self-driving cars process vast amounts of sensitive data, and any breach could have severe consequences, compromising safety or personal privacy. Ensuring the integrity and confidentiality of this data is paramount. Measures include:

- Encryption: Protecting data during transmission and storage.

- Secure Booting: Ensuring only legitimate software runs on the vehicle’s systems.

- Intrusion Detection Systems: Monitoring for and responding to unauthorized access attempts.

The industry is working closely with cybersecurity experts and regulatory bodies to develop stringent standards and protocols to protect autonomous vehicle systems from malicious attacks and ensure public trust.
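Data integrity, one of the goals named above, can be illustrated with a message authentication code: the sender attaches a tag computed from a secret key, and the receiver rejects any message whose tag does not match. The sketch uses Python's standard `hmac` and `hashlib` modules with a shared key. That shared key is a simplification, since production vehicles generally rely on certificate-based signatures and hardware-protected keys.

```python
import hashlib
import hmac


def sign_message(key: bytes, payload: bytes) -> bytes:
    """Return an HMAC-SHA256 tag for the payload."""
    return hmac.new(key, payload, hashlib.sha256).digest()


def verify_message(key: bytes, payload: bytes, tag: bytes) -> bool:
    """Check the tag in constant time to avoid leaking timing information."""
    return hmac.compare_digest(sign_message(key, payload), tag)


key = b"demo-key-not-for-production-use!"  # placeholder secret for the example
command = b"brake:0.4"
tag = sign_message(key, command)

print(verify_message(key, command, tag))       # genuine message
print(verify_message(key, b"brake:0.0", tag))  # tampered message
```

Authentication alone does not hide the message contents; confidentiality requires encryption on top of it.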

Software and Computing Hardware: The Architecture Enablers

The sophisticated operations of a self-driving car are powered by a highly specialized software stack running on powerful, purpose-built computing hardware. This combination represents the core operational environment for the AI and control systems.

The Software Stack

The software stack is a complex hierarchy of programs and algorithms designed to manage every aspect of autonomous driving. It includes:

- Operating System (OS): A real-time operating system (RTOS) or a specialized Linux distribution often forms the base, ensuring deterministic and low-latency execution of critical functions.

- Middleware: Facilitates communication and data exchange between different software modules, ensuring seamless integration of sensor data, perception outputs, planning decisions, and control commands.

- Application Layer: Contains the core AI algorithms for perception, prediction, planning, and control, developed by autonomous driving companies. This layer also includes navigation, user interface, and diagnostic functionalities.

- Safety and Redundancy Modules: These are critical components designed to detect failures, enable fail-operational capabilities, and provide fallback mechanisms to ensure safe system behavior even in the event of component failure.

The development and validation of this software stack are incredibly complex, requiring millions of lines of code and rigorous testing in both simulation and real-world environments. Continuous integration and over-the-air updates are crucial for improving performance and adding new features.
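The middleware layer's job of passing data between modules is often built on a publish/subscribe pattern. Below is a minimal in-process sketch of that pattern. The `MessageBus` class and the topic names are hypothetical, and real automotive middleware adds networking, timing guarantees, and type checking.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[Any], None]


class MessageBus:
    """Tiny synchronous publish/subscribe bus for illustration."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Any) -> int:
        """Deliver the message to every subscriber; return how many received it."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


bus = MessageBus()
planner_inbox: List[Any] = []

# The planning module listens for obstacles reported by perception.
bus.subscribe("perception/obstacles", planner_inbox.append)
bus.publish("perception/obstacles", {"id": 1, "distance_m": 18.5})
print(planner_inbox)
```

Decoupling modules this way means the perception code does not need to know which components consume its output, which simplifies testing each module in isolation.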

High-Performance Computing Hardware

Processing the immense volume of data generated by sensors and executing complex AI models in real-time requires significant computational power. Self-driving cars are equipped with specialized computing platforms designed for high throughput and low latency. These typically include:

- Graphics Processing Units (GPUs): Specialized for parallel processing, GPUs are ideal for accelerating deep learning algorithms used in perception and prediction.

- Application-Specific Integrated Circuits (ASICs): Custom-designed chips optimized for specific AI tasks, offering superior efficiency and performance compared to general-purpose processors for highly repetitive computations.

- Field-Programmable Gate Arrays (FPGAs): Reconfigurable hardware that can be programmed for specific tasks, providing flexibility and high performance for certain real-time computations.

These computing platforms are also designed to be highly reliable, fault-tolerant, and capable of operating within the harsh environmental conditions of a vehicle (e.g., extreme temperatures, vibrations). The entire hardware ecosystem is built for robustness and redundancy to prevent catastrophic failures, ensuring continuous, safe operation.

Ethical Considerations and Societal Impact

Beyond the technical prowess, the deployment of self-driving cars raises profound ethical questions and promises significant societal shifts. These are not merely engineering challenges but complex dilemmas that require careful consideration and public discourse.

Ethical Dilemmas: The Trolley Problem on Wheels

Perhaps the most widely discussed ethical dilemma is the “trolley problem” applied to autonomous vehicles. In an unavoidable accident scenario, how should a self-driving car be programmed to prioritize lives? Should it protect its occupants at all costs, or minimize harm to the greatest number of people, even if it means sacrificing its passengers? These are not trivial questions, and there is no universally agreed-upon answer. The programming of such moral judgments requires careful consideration of:

- Utilitarianism vs. Deontology: Whether to prioritize the greatest good or adhere to strict moral rules.

- Human life valuation: The inherent difficulty in assigning value to different lives (e.g., young vs. old, pedestrian vs. occupant).

- Transparency and accountability: Who is responsible when an autonomous vehicle causes an accident? The manufacturer, the software developer, the owner, or the vehicle itself?

The industry is actively engaging with ethicists, legal experts, and the public to develop frameworks and guidelines for these challenging situations, striving for solutions that align with societal values and build public trust.

Societal Impacts: More Than Just Transport

The widespread adoption of self-driving cars has the potential to alter society in numerous ways, extending far beyond simply getting from point A to point B:

- Urban Planning: Reduced need for parking spaces, potentially freeing up valuable urban real estate for other uses. The design of roads and cities might also evolve to accommodate autonomous flows.

- Accessibility: Enhanced mobility for the elderly, disabled, and those unable to drive, fostering greater independence and inclusion. This could significantly improve quality of life for millions.

- Traffic Congestion: Potentially smoother traffic flow through optimized routing and coordinated movements, leading to reduced congestion and travel times. Platooning (vehicles driving in close formation) could further enhance road capacity.

- Economic Shifts: Impact on industries like car ownership, insurance, public transport, and various sectors of the gig economy. New business models, such as Mobility-as-a-Service (MaaS), could emerge.

- Safety: A significant reduction in accidents caused by human error, which could prevent a large share of the more than one million road deaths that occur worldwide each year, along with countless injuries. This is often cited as the primary benefit.

Navigating these profound changes requires proactive policy-making, public education, and continuous adaptation from governments, industries, and communities to harness the benefits while mitigating potential disruptions.

| Key Component | Brief Description |
|---|---|
| 👁️ Sensor Perception | Lidar, radar, cameras, and ultrasonics gather environmental data. |
| 🧠 AI & Machine Learning | Processes data for perception, prediction, planning, and control. |
| 🗺️ Mapping & Localization | HD maps and real-time positioning for precise navigation. |
| 🚦 V2X Communication | Vehicle-to-everything communication enhances awareness and safety. |

Frequently Asked Questions About Self-Driving Cars

What are the levels of driving automation?

SAE International (formerly the Society of Automotive Engineers) defines six levels of driving automation, from Level 0 (no automation) to Level 5 (full automation, where the vehicle can handle all driving tasks in all conditions). Most commercially available systems today are Level 2 (partial automation, requiring driver supervision), with Level 3 (conditional automation) slowly emerging in specific applications.

How do self-driving cars handle bad weather?

Self-driving cars rely on a fusion of multiple sensors. Cameras struggle in heavy rain or fog, and Lidar loses range and accuracy when its laser pulses are scattered, while radar is far less affected by these conditions. Developers are also researching advanced sensing technologies like thermal cameras and specialized algorithms to improve performance in adverse weather and keep perception redundant.

Are self-driving cars safer than human drivers?

The ultimate goal of self-driving cars is to significantly reduce accidents caused by human error, which is widely cited as a contributing factor in over 90% of collisions. While current autonomous systems are still under development and testing, data from miles driven indicates a strong potential for improved safety, though challenges in specific edge cases persist.

What role does AI play in a self-driving car?

AI is the brain of the self-driving car. It processes vast amounts of sensor data to perceive the environment, predicts the behavior of other road users, plans the vehicle’s trajectory, and executes control commands. Machine learning, a subset of AI, enables the system to learn from data and improve its driving capabilities over time.

How do HD maps differ from regular GPS maps?

HD maps offer centimeter-level accuracy, providing detailed information about lane geometry, road infrastructure (traffic lights, signs), and permanent objects. Unlike regular GPS maps, which are for general navigation, HD maps serve as a precise prior knowledge base, critical for accurate localization and proactive planning by autonomous vehicles.

Conclusion

A close look at the technology behind self-driving cars reveals a field driven by relentless innovation and meticulous engineering. From the network of sensors that perceive the world, to the AI that interprets it, to the control systems that act on its decisions, every component plays a critical role in bringing autonomous mobility to life. As these technologies mature, addressing not only computational hurdles but also ethical considerations and societal impacts, the promise of safer, more efficient, and more accessible transportation draws closer. The journey ahead is complex, but the foundational technology is advancing steadily, charting a course toward a future where our relationship with driving is fundamentally redefined.